If you are reading this, a Happy Christmas and a Happy New Year to you.

I hope your nearest, dearest and loved ones, wherever they may be, are safe and sound.

Every year I write a blog post about music, and my favourite tracks of the year. This year I thought I’d try something different. Music can take you to new places and introduce you to new people. So here’s a list of the live music I’ve seen this year (with illustrations):

February 5th:

Artiste: Slapper.

Venue: A pub in London.

Also featuring Bruno and the Outrageous Methods of Presentation and Scrotum Clamp.

It’s a source of great shame that I had not seen Slapper until now, bearing in mind I’ve known my good friend Sue, who is their guiding spirit, for more than 30 years. Slapper are an experience: performance art punk rock theatre? In French? And Spanish? An evening that will stay with me… Thanks to Sue and Hally for their ongoing moral and creative support (“making big things on zero budgets”).

March 12th:

Beethoven’s 6th Symphony (Pastoral) performed by The Sutton Philharmonic Orchestra.

Venue: Guildhall School of Music, London

A delightful, zesty performance of this classic. Heartwarming…

April 2nd:

Artiste: Lyagas and Mutsumi Abe

Venue: a performance space somewhere in Tokyo.

Folk music from Eastern Europe and Greece played by the fine Japanese musicians Lyagas. Thanks very much to fiddle player supreme Mutsumaki-san.

May 3rd:

Artiste: Otoboke Beaver

Venue: London

The best riotgrrrl act in Japan and the hardest working band on the planet. Fabulous!

21st July:

Artiste: Mariza

Venue: the Royal Albert Hall

The Queen of Fado in complete command…

16th August:

Artiste: WITCH FEVER

Venue: The Craufurd Arms in Wolverton.

Still my favourite UK band. Everything you want a rock band to be. Perfect venue too. I will return to Wolverton!

24th August:

Artiste: Rosalia’s “El Mal Querer” at Pitchblack Playback.

Venue: The Riverside, London

Not really a concert, but it’s a great album…

3rd September:

Artiste: Tina Martini and Wayne Champagne

Venue: private event

Reunited… and it feels so good…

18th September:

Artiste: Shawn Colvin

Venue: The Stables

Shawn has written a lot of great songs and she played many of them this evening. I agree with her about electronic guitar tuners… away with these vile boxes!

30th September:

Handel’s Semele performed by Blackheath Halls Opera.

Venue: Blackheath Halls, South London

Enjoyable and very slickly staged. Great work from the chorus!

21st October:

More traditional Greek folk music at a church hall in Shepherd’s Bush, London. Mutsumaki-san is in charge once again.

26th October:

“9 to 5 The Musical” performed by the Mill Hill Musical Theatre Company

Venue: a church hall in Mill Hill, London

Dolly Parton rules. The show must go on!

11th November:

Artiste: The Zemlinski Trio

Venue: Civic Centre, Berkhamsted.

Mendelssohn, Brahms and, of course, Beethoven.

28th November:

Artiste: CLT DRP

Venue: the Lexington, London

Dramatic, provocative feminist electropunk. Wild and exciting. Album of the year.

Here’s a live performance from CLT DRP earlier in the year:

Congratulations were also very good.

My idea of a good night out!

3rd December:

Pagliacci and Cavalleria Rusticana

Venue: the Royal Opera House, Covent Garden

Just a couple of everyday tales of lust, rage and slaughter. With a superb orchestra.

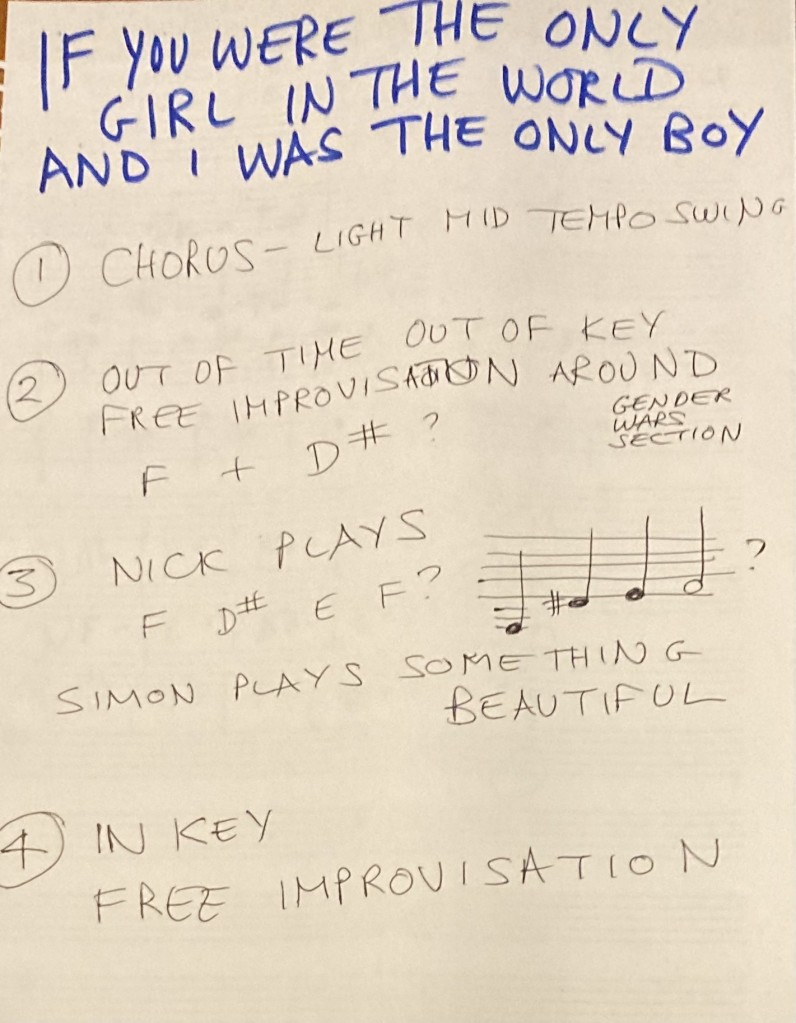

P.S. In August my great friend Simon Hopkins once again facilitated our annual session. This isn’t really a public performance, as only neighbours, passers-by and Simon’s nearest and dearest might have heard it. Thanks as always to him. I particularly liked this one, which is an accurate distillation of our approach…